Date: 10/02/2025

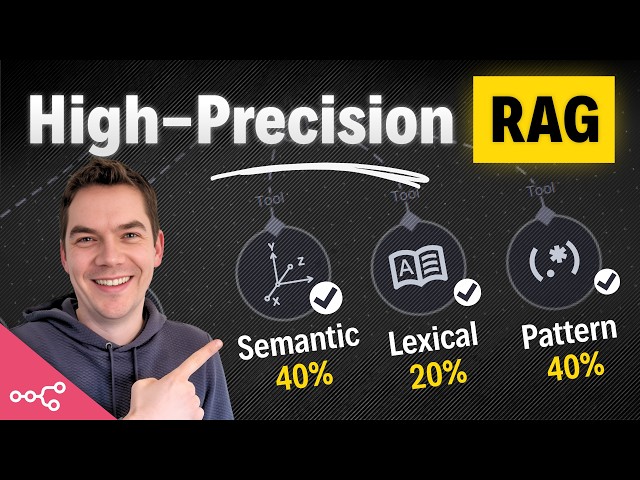

Okay, this video on building a Hybrid RAG Search Engine in n8n is exactly the kind of thing that gets me fired up about the future of development. We’re talking about moving beyond simple vector embeddings for Retrieval-Augmented Generation (RAG) and into a more robust search solution that holds up in real-world use. It walks you through combining dense embeddings (semantic search), sparse retrieval (BM25, lexical search), and even pattern matching within an n8n workflow using Supabase and Pinecone. The coolest part? It dynamically weights these methods based on the query type. Better retrieval means the model has less room to hallucinate!
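To make the dynamic weighting idea concrete, here’s a minimal sketch of how I picture it. To be clear, this is my own guess at the shape of it, not the logic from the video: the query categories, the regex, and the weight numbers are all assumptions I made up for illustration.

```python
import re

# Hypothetical weight profiles per query type. The numbers are illustrative
# assumptions, not values taken from the video.
WEIGHTS = {
    "exact": {"dense": 0.2, "sparse": 0.4, "pattern": 0.4},
    "keyword": {"dense": 0.4, "sparse": 0.5, "pattern": 0.1},
    "semantic": {"dense": 0.7, "sparse": 0.3, "pattern": 0.0},
}

# Assumed heuristic for "this looks like an exact lookup": a quoted phrase,
# an ID such as SKU-1234 or ERR_42, or a version number such as v2.3.1.
ID_LIKE = re.compile(r'"[^"]+"|\b[A-Z]{2,}[-_]?\d+\b|\bv?\d+\.\d+(?:\.\d+)?\b')


def classify_query(query: str) -> str:
    """Bucket a query into 'exact', 'keyword', or 'semantic'."""
    if ID_LIKE.search(query):
        return "exact"
    if len(query.split()) <= 3:
        return "keyword"
    return "semantic"


def weights_for(query: str) -> dict[str, float]:
    """Return the retrieval weights to use for this query."""
    return WEIGHTS[classify_query(query)]
```

So a question like "What is the price of SKU-1234?" leans on lexical and pattern matching, while a longer natural-language question leans on the dense embeddings. In n8n this kind of logic would live in a Code node (in JavaScript), but the idea is the same.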

Why is this valuable for those of us transitioning to AI-enhanced development? Because it addresses a very real problem: vector search alone often fails on exact matches and specific details such as product codes, error strings, or function names. As someone who’s struggled with searching through piles of documentation and code using just vector databases, I can attest to that! This approach of hybrid search, especially the dynamic weighting, aligns perfectly with the kind of automation and intelligent workflows I’m aiming to build. Think about applying this to customer support bots that need to accurately find product information, or internal knowledge bases that require precise code snippet retrieval.

Seriously, the idea of programmatically shifting search strategies based on the question being asked is a game-changer. I see this as a concrete step towards building truly intelligent and adaptable AI agents. Reciprocal Rank Fusion (RRF), which merges the ranked lists from several retrievers into a single ranking, isn’t something I’ve used extensively, but I can already think of ten client projects where it could improve search. I’m definitely going to be experimenting with this setup. n8n, Supabase, and Pinecone are all tools I’m familiar with, so the barrier to entry is pretty low and the potential payoff is huge. It’s time to stop relying on “good enough” vector search and start building something truly intelligent!
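Since RRF is new to me, I sketched it out to see how it works. Standard RRF scores each document as the sum of 1 / (k + rank) over every result list it appears in, with k = 60 as the commonly used constant. The per-method weights here are my own addition to tie it in with the dynamic weighting idea, and the function name and input shape are assumptions, not what the video’s workflow uses.

```python
from collections import defaultdict


def weighted_rrf(
    rankings: dict[str, list[str]],
    weights: dict[str, float],
    k: int = 60,
) -> list[str]:
    """Fuse ranked lists of document IDs with weighted Reciprocal Rank Fusion.

    rankings maps a retrieval method name to its doc IDs, best first.
    weights maps a method name to its weight (an assumed extension of plain
    RRF; methods missing from weights contribute nothing).
    """
    scores: dict[str, float] = defaultdict(float)
    for method, doc_ids in rankings.items():
        weight = weights.get(method, 0.0)
        for rank, doc_id in enumerate(doc_ids, start=1):
            scores[doc_id] += weight / (k + rank)
    # Highest fused score first; ties broken by doc ID for stable output.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


# Made-up result lists from two retrievers.
fused = weighted_rrf(
    {"dense": ["a", "b", "c"], "sparse": ["b", "c", "a"]},
    {"dense": 0.5, "sparse": 0.5},
)
print(fused)
```

What I like about this is that it only needs ranks, not raw scores, so there’s no need to normalize BM25 scores against cosine similarities before combining them.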